Databricks Architecture

Databricks Architecture Overview

High-level architecture

Databricks operates out of a control plane and a data plane:

The control plane includes the backend services that Databricks manages in its own cloud account (on Azure, AWS, or GCP). Notebook commands and many other workspace configurations are stored in the control plane and encrypted at rest.

The data plane is managed by your own cloud account (AWS, Azure, or GCP). This is where your data resides and where it is processed.

Taking Azure as an example, Azure Databricks connectors connect clusters to data sources outside of the Azure account, either to ingest data or for storage. Data can also be ingested from external streaming sources, such as event data, IoT data, and more.

- Your data is stored at rest in your own cloud account (Azure or any other) in the data plane and in your own data sources, not in the control plane, so you maintain control and ownership of your data.

- Job results reside in storage in your account.

- Interactive notebook results are stored in a combination of the control plane (partial results for presentation in the UI) and your Azure/AWS storage. If you want interactive notebook results stored only in your cloud account storage (Azure/AWS), ask your Databricks representative to enable interactive notebook results in the customer account for your workspace.

Clusters :

A cluster is a collection of virtual machines: usually a driver node, which orchestrates the tasks performed by one or more worker nodes.

Clusters allow us to treat this group of computers as a single compute engine via the driver node.

A Databricks cluster is a set of computation resources and configurations on which you run data engineering, data science, and data analytics workloads, such as production ETL pipelines, streaming analytics, ad-hoc analytics, and machine learning.

You run these workloads as a set of commands in a notebook or as an automated job. Databricks makes a distinction between all-purpose clusters and job clusters.

All-purpose clusters vs Job Clusters -

To create a cluster, click the Compute icon in the sidebar, which shows the list of clusters, as below:

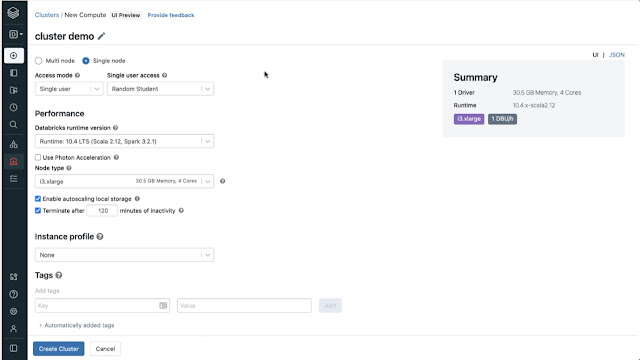

Then click Create cluster and select the required options.

Depending on the workspace being used, you may or may not have the privileges to create clusters. Check with the platform admin whether an existing cluster is available for you to connect to, or whether you need to create a new one.

Cluster Configuration :

1. Single Node/Multi Node

A multi node cluster has one driver node and one or more worker nodes.

When you run a Spark job against a multi node cluster, the driver node distributes the tasks to run on the worker nodes in parallel and returns the result.

Multi node clusters let us scale horizontally depending on the workload: we can keep adding worker nodes as we need them. They are the default type of cluster for Spark jobs and are suitable for large workloads.
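The driver/worker split can be illustrated with a small analogy in plain Python. This is not Spark code: a thread pool stands in for the worker nodes, and the names `run_job`, `partitions`, and `task` are made up for the illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def run_job(partitions, task, num_workers):
    """Driver-side view: hand one task per partition to a pool of workers."""
    with ThreadPoolExecutor(max_workers=num_workers) as workers:
        # map() runs the tasks in parallel and returns results in partition order.
        partial_results = list(workers.map(task, partitions))
    # The "driver" combines the partial results into the final answer.
    return sum(partial_results)


partitions = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]
total = run_job(partitions, task=sum, num_workers=3)
print(total)  # 45
```

Raising `num_workers` is the analogue of adding worker nodes: the same job is spread across more executors without changing the job itself.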

A single node cluster has only one node, the driver node, and no worker nodes.

Even though there are no worker nodes, single node clusters also support Spark workloads.

When you run a Spark job, the driver node acts as both the driver and the worker. Single node clusters are not horizontally scalable, so they are not suitable for large ETL workloads. They are mainly targeted at lightweight machine learning and data analysis workloads that don't require any distributed compute capacity.
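As a rough sketch, the two cluster shapes could be expressed as request bodies for the Databricks Clusters REST API. The field names below follow that API as I understand it, so check them against the API reference for your workspace. The helper functions and the placeholder values (`<runtime-version>`, `<vm-size>`) are illustrative only, and nothing here calls Databricks.

```python
import json


def multi_node_spec(name, spark_version, node_type_id, num_workers):
    """Spec for a cluster with one driver and `num_workers` worker nodes."""
    if num_workers < 1:
        raise ValueError("a multi node cluster needs at least one worker")
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        "num_workers": num_workers,
    }


def single_node_spec(name, spark_version, node_type_id):
    """Spec for a cluster whose driver also executes the Spark tasks."""
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        "num_workers": 0,  # no worker nodes
        # Assumed settings that mark the cluster as single node.
        "spark_conf": {
            "spark.databricks.cluster.profile": "singleNode",
            "spark.master": "local[*]",
        },
        "custom_tags": {"ResourceClass": "SingleNode"},
    }


etl = multi_node_spec("etl", "<runtime-version>", "<vm-size>", num_workers=4)
adhoc = single_node_spec("adhoc", "<runtime-version>", "<vm-size>")
print(json.dumps(adhoc, indent=2))
```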

2. Access Mode

3. Databricks Runtime

4. Auto Termination

- Terminates the cluster after X minutes of inactivity

- Default value for single node and standard clusters is 120 minutes

- Users can specify a duration between 10 and 10000 minutes
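The limits above can be captured in a small validation helper. This is a minimal sketch: `autotermination_minutes` is the field name I believe the Clusters API uses, while the constants and the function itself are hypothetical and simply encode the numbers stated in this section.

```python
MIN_MINUTES = 10       # lower bound stated above
MAX_MINUTES = 10000    # upper bound stated above
DEFAULT_MINUTES = 120  # default stated above


def autotermination_setting(minutes=DEFAULT_MINUTES):
    """Return the auto termination part of a cluster spec, or raise if out of range."""
    if not MIN_MINUTES <= minutes <= MAX_MINUTES:
        raise ValueError(
            f"auto termination must be between {MIN_MINUTES} and {MAX_MINUTES} minutes"
        )
    return {"autotermination_minutes": minutes}


print(autotermination_setting())    # {'autotermination_minutes': 120}
print(autotermination_setting(30))  # {'autotermination_minutes': 30}
```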

5. Auto Scaling

- User specifies the min and max number of worker nodes

- Auto scales between min and max based on the workloads

- Not recommended for streaming workloads
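A sketch of how the min/max bounds behave: the cluster size follows the workload but never leaves the configured range. The `autoscale` block with `min_workers` and `max_workers` mirrors the Clusters API as I understand it. `clamp_workers` is a hypothetical helper that only illustrates the bounding, not the actual scaling algorithm Databricks uses, and the strict `min < max` check is an assumption.

```python
def autoscale_setting(min_workers, max_workers):
    """Return the autoscale part of a cluster spec."""
    # Assumption: the maximum must be strictly greater than the minimum.
    if min_workers < 1 or max_workers <= min_workers:
        raise ValueError("expected 1 <= min_workers < max_workers")
    return {"autoscale": {"min_workers": min_workers, "max_workers": max_workers}}


def clamp_workers(desired, setting):
    """Bound the number of workers a workload asks for to the configured range."""
    bounds = setting["autoscale"]
    return max(bounds["min_workers"], min(desired, bounds["max_workers"]))


setting = autoscale_setting(min_workers=2, max_workers=8)
print(clamp_workers(1, setting))   # 2  (never below the minimum)
print(clamp_workers(5, setting))   # 5
print(clamp_workers(20, setting))  # 8  (never above the maximum)
```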

6. Cluster VM Type/size

Memory optimized - Recommended for memory intensive applications

Compute optimized - Recommended for structured streaming applications where the processing rate needs to stay above the input rate at peak times of the day.

Storage optimized - For use cases requiring high disk throughput and I/O

General purpose - Recommended for enterprise grade applications and analytics with in-memory caching

GPU accelerated Instance types - Recommended for deep learning models that are data and compute intensive.

7. Cluster Policy

Users could accidentally create clusters which are oversized and too expensive to run. Cluster policies help us avoid these common issues. Administrators can create cluster policies with restrictions and assign them to users or groups.

- Cluster policies simplify the user interface, thus enabling standard users to create clusters

- No need for administrators to be involved in every decision.

- Achieves cost control by limiting the maximum size of the clusters.

- Only available on premium tier.
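To make the idea concrete, here is a sketch of what a policy definition might look like, together with a toy checker. The rule types (`fixed`, `range`, `allowlist`) and attribute paths follow the cluster policy definition format as I understand it. The VM names are placeholders, and `violations` is a hypothetical helper: real enforcement happens inside Databricks and covers more rule types than this simplification.

```python
policy_definition = {
    # Cap the cluster size to control cost.
    "autoscale.max_workers": {"type": "range", "maxValue": 8},
    # Force a value and hide the field, which simplifies the UI.
    "autotermination_minutes": {"type": "fixed", "value": 60, "hidden": True},
    # Restrict the VM sizes users may pick (placeholder names).
    "node_type_id": {"type": "allowlist", "values": ["<small-vm>", "<medium-vm>"]},
}


def violations(spec, policy):
    """Return the policy paths that a flattened cluster spec breaks."""
    broken = []
    for path, rule in policy.items():
        value = spec.get(path)
        if rule["type"] == "fixed" and value != rule["value"]:
            broken.append(path)
        elif rule["type"] == "range" and value is not None and value > rule["maxValue"]:
            broken.append(path)
        elif rule["type"] == "allowlist" and value not in rule["values"]:
            broken.append(path)
    return broken


oversized = {
    "autoscale.max_workers": 20,
    "autotermination_minutes": 60,
    "node_type_id": "<small-vm>",
}
print(violations(oversized, policy_definition))  # ['autoscale.max_workers']
```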

Types

- Job compute

- Personal compute

- Power user compute

- Shared compute

Cluster Pool

Clusters usually take time to start up and auto scale.

To minimize that time, we can use pools. A cluster pool is a set of idle, ready-to-use virtual machines that allow us to reduce the cluster start and auto scaling times.
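A sketch of how a pool and a cluster that draws from it might be described. The field names follow the Instance Pools and Clusters REST APIs as I understand them. The concrete values, the pool id `pool-123`, and the `cluster_from_pool` helper are made up for illustration.

```python
pool_spec = {
    "instance_pool_name": "shared-pool",
    "node_type_id": "<vm-size>",                  # every VM in the pool has this size
    "min_idle_instances": 2,                      # VMs kept warm and ready to use
    "max_capacity": 10,                           # upper bound on idle plus in-use VMs
    "idle_instance_autotermination_minutes": 30,  # release extra idle VMs after this
}


def cluster_from_pool(name, spark_version, pool_id, num_workers):
    """Cluster spec that takes its VMs from a pool instead of naming a VM size."""
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "instance_pool_id": pool_id,  # VM size comes from the pool
        "num_workers": num_workers,
    }


cluster = cluster_from_pool("etl", "<runtime-version>", "pool-123", num_workers=2)
print(cluster)
```

Because the pool already holds idle machines, a cluster defined this way can skip most of the VM provisioning time on start-up and when scaling out.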

Azure Databricks Cluster Pricing

- Depends on workload (All purpose/Job/SQL/Photon)

- Tier (Standard/Premium)

- VM Type (General Purpose/GPU/Optimised)

- Purchase plan (Pay as you go/Pre-purchased)

A Databricks Unit (DBU) is a normalized unit of processing power on the Databricks Lakehouse Platform used for measurement and pricing purposes.
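A worked example of how these factors combine, assuming the bill is the DBU charge plus the underlying VM charge. All rates below are invented round numbers, not real prices: actual DBU rates depend on the workload, tier, VM type, and purchase plan listed above.

```python
def cluster_cost(nodes, hours, dbu_per_node_hour, price_per_dbu, vm_price_per_hour):
    """Return (DBUs consumed, total cost) for a cluster run."""
    dbus = nodes * hours * dbu_per_node_hour
    dbu_cost = dbus * price_per_dbu
    vm_cost = nodes * hours * vm_price_per_hour
    return dbus, dbu_cost + vm_cost


# Hypothetical run: 1 driver + 2 workers for 2 hours.
dbus, total = cluster_cost(
    nodes=3,
    hours=2,
    dbu_per_node_hour=1.0,   # made-up DBU rating of the VM size
    price_per_dbu=0.5,       # made-up price for the workload and tier
    vm_price_per_hour=0.25,  # made-up VM price
)
print(dbus, total)  # 6.0 4.5
```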

Reference and credits - Databricks.com
